[R] Wayback Machine - Archive all Links from a Single Website Project

Abstract / TL;DR

Short proof-of-concept on how to batch-archive a set of URLs with the Internet Archive's Wayback Machine & R / Rcrawler

- 1 Use Case: Archive All Links from a Single Web Project

- 2 Theory: Crawl - Extract - Archive

- 3 “Minimum Viable Demo” - fetchURLs(target) & archiveURLs(URLs)

- 4 Questions/TBD

Long time no see, R…. Well, not really, but I’ve been heads down working on some larger projects lately and didn’t have the time to blog. 1 of the projects should be published soon (plenty of hill-shading, maps & data viz), 1 is still in the making (something JS-intense with Google Apps Script & clasp), but 1 - which is my biggest gig so far - is online now with all features (incl. my first-ever scrollytelling implementation) and will serve as my use case in this post.

1 Use Case: Archive All Links from a Single Web Project

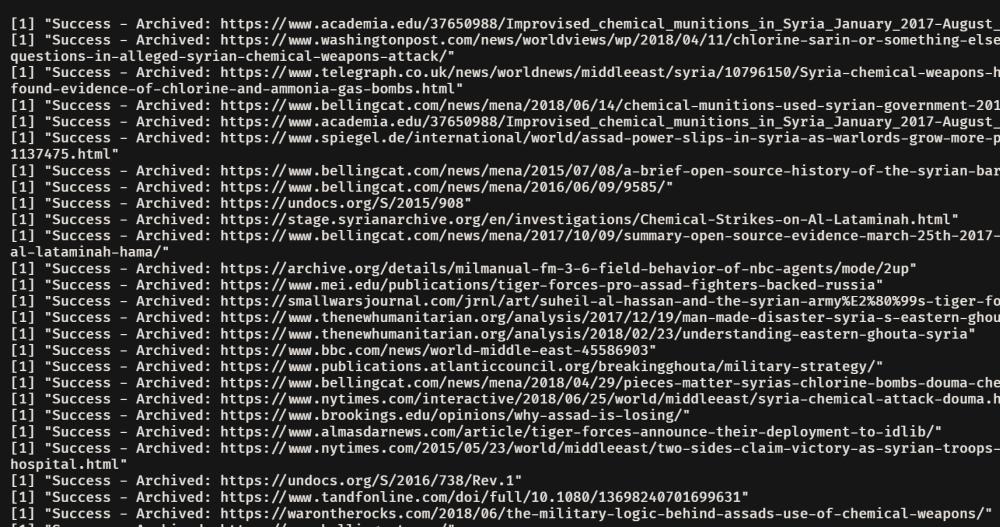

"Success - Batch Archiving external URLs with the Wayback Machine API & R / Rcrawler

Inspired by a session during this week’s epic csv,conf v5 remote conference event (really, omg, this experience would have been hard to top even pre-Corona), I wanted to test how easy it would be to batch-archive a set of external links from a single website project with the Wayback Machine API & R. In her talk on “Data Archival from a journalist’s perspective”, Soila Kenya discussed the necessity and ways to preserve web content esp. in the case of interactive pieces (i.e. by screen-capturing the user journey in the same manner as Gamers do in Minecraft!).

The web project’s core - GPPI’s rigorous research on Chemical Weapons attacks in Syria - is evidence-heavy (closed and open sources), and while almost all links in the CSV dataset have already been archived, most of the external (=outbound) web links weren’t. As websites are constantly re-designed and re-structured, link rot is a thing. Isn’t that a purrrfect reason to get started with using the Wayback Machine API?

So what’s to do? As R users we are usually blessed with a legion of user-friendly wrapper packages for most of our use cases. But in the case of Internet Archive’s Wayback Machine API I was a bit surprised. There seems to be only one actively maintained package on CRAN (rOpenSci’s internetarchive), but it “just” lets you search and download archived content, not add your own. Short of starting to write my first R package, I tried to figure out what’s already possible with the given set of options.

2 Theory: Crawl - Extract - Archive

In theory, the approach is a simple 3-step process:

- crawl all (or a subset of) pages of a single website / web project (let’s focus on HTML content first; JS requires more work, but solutions for scraping dynamic / JS-rendered content do exist)

- extract all (or a subset of) external / outbound URLs (http/https)

- archive each external URL with a POST request to the Wayback Machine API

Fortunately, #Rstats-Twitter is always there to help out and Peter Meissner pointed me to Salim Khalil’s Rcrawler package (cf. Khalil/Fakir 2017).
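To make step 2 of the list above concrete, here is a minimal base-R sketch of what "external" means: an http/https link whose host differs from the host of the crawled site. The helpers extract_host() and external_links() are hypothetical names for illustration only and are not part of Rcrawler (which does this classification itself in the demo further down); the example.org links are made up.

```r
# Hypothetical helper: reduce a URL to its lower-cased host without "www."
extract_host <- function(urls) {
  # strip the scheme, then everything from the first "/", "?", "#" or ":" onwards
  hosts <- sub("^https?://", "", urls, ignore.case = TRUE)
  hosts <- sub("[/?#:].*$", "", hosts)
  tolower(sub("^www\\.", "", hosts))
}

# Hypothetical helper: keep unique http(s) links that point to another host
external_links <- function(links, site_url) {
  is_http <- grepl("^https?://", links, ignore.case = TRUE)
  site_host <- extract_host(site_url)
  unique(links[is_http & extract_host(links) != site_host])
}

# Made-up links as they might appear on a page of https://example.org
links <- c(
  "https://example.org/about",
  "http://www.example.org/contact",
  "https://archive.org/web/",
  "mailto:someone@example.org",
  "https://archive.org/web/"
)

external_links(links, "https://example.org")
```

Note that this simple comparison treats subdomains as different hosts and does not handle URLs with embedded credentials, so it is only meant to illustrate the idea.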

3 “Minimum Viable Demo” - fetchURLs(target) & archiveURLs(URLs)

Rcrawler’s syntax might seem a bit unorthodox, but the package is really feature-rich and we can actually solve steps 1 & 2 with it (and purrr). Having collected all external links, all we have to do is figure out how to send a POST request to the Wayback API; after that we can work on refinement and parallelization.

3.1 Setup

library(tidyverse)

library(Rcrawler)

# use all CPU cores (~threads) minus 1 to parallelize scraping

cores = (parallel::detectCores() - 1)

print(paste0("CPU threads available: ", cores))

## [1] "CPU threads available: 7"

3.2 fetchURLs(url) - Function to crawl & scrape a single Website

Rcrawler() implicitly writes an INDEX object to the global environment. INDEX$Url contains the URLs of the crawled pages.

fetchURLs <- function(url, ignoreStrings = NULL) {

# Crawl our target website's pages

Rcrawler(

Website = url,

no_cores = cores,

no_conn = 8, # don't abuse this ;)

saveOnDisk = FALSE # we don't need the HTML files of our own website

)

# extract external URLs from each scraped page

urls <- purrr::map(INDEX$Url, ~LinkExtractor(.x,

ExternalLInks = TRUE,

removeAllparams = TRUE))

# only keep external links, flattened into a single character vector

# (map() with a name extracts that element from each page's result)

urls_vec <- urls %>%

purrr::map("ExternalLinks") %>%

unlist()

# helper: optional regex pattern to filter out specific unwanted links

if (!is.null(ignoreStrings)) {

urls_vec <- urls_vec[!str_detect(urls_vec, ignoreStrings)]

}

urls_vec <- urls_vec %>%

str_replace("^http:", "https:") %>% # make all links https

unique() # keep only unique links

return(urls_vec)

}

3.3 archiveURL() - Function to archive a single URL with a POST request

TODO: Check first with the internetarchive package whether a link has already been archived and, if so, re-archive only if the existing snapshot is older than n days.

TODO: return 2 items: originalURL, archivedURL
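The first TODO could also be approached without a wrapper package, for example via the Wayback Machine's availability endpoint (https://archive.org/wayback/available), which returns the closest snapshot and its timestamp as JSON. What follows is a rough sketch that is not yet wired into archiveURL(): the helper names and the 30-day default are assumptions, and the internetarchive package is deliberately swapped out for a plain GET request with httr.

```r
# Hypothetical helper: is a Wayback timestamp ("YYYYMMDDhhmmss") missing
# or older than n_days? `now` is a parameter so the check can be tested offline.
snapshot_is_stale <- function(timestamp, n_days, now = Sys.time()) {
  if (is.null(timestamp) || is.na(timestamp)) return(TRUE) # never archived
  taken <- as.POSIXct(timestamp, format = "%Y%m%d%H%M%S", tz = "UTC")
  as.numeric(difftime(now, taken, units = "days")) > n_days
}

# Hypothetical helper: ask the availability endpoint for the closest snapshot.
# Returns NULL if the URL has no snapshot yet.
closest_snapshot <- function(target_url) {
  response <- httr::GET("https://archive.org/wayback/available",
                        query = list(url = target_url))
  httr::stop_for_status(response)
  parsed <- httr::content(response, as = "parsed", type = "application/json")
  parsed$archived_snapshots$closest
}

# Hypothetical helper: TRUE if the URL should be (re-)archived
needs_archiving <- function(target_url, n_days = 30) {
  snapshot <- closest_snapshot(target_url)
  snapshot_is_stale(snapshot$timestamp, n_days)
}

# Offline check of the date logic with a fixed "now"
now <- as.POSIXct("2020-05-20 00:00:00", tz = "UTC")
snapshot_is_stale("20130919044612", n_days = 30, now = now)
```

Keeping the date comparison separate from the HTTP call makes it possible to test the staleness rule without hitting the API.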

archiveURL <- function(target_url) {

# Community-built Wayback Machine API / endpoint

endpoint <- "https://pragma.archivelab.org"

# An alternative approach might be to use a quick & dirty GET request

# https://github.com/motherboardgithub/mass_archive/blob/master/mass_archive.py

# the POST request to the API

response <- httr::POST(url = endpoint,

body = list(url = target_url),

encode = "json")

status_code <- response$status_code

# Only build the archive URL if the POST request was successful

# TODO: needs more robustness, i.e. some returned paths are not valid

# Hypothesis: Not valid because URL was archived recently

# TODO: add check whether URL has been archived already

if (status_code == 200) {

wayback_path <- httr::content(response)$wayback_id # returns path

wayback_url <- paste0("https://web.archive.org", wayback_path)

print(paste0("Success - Archived: ", target_url))

} else {

wayback_url <- NA_character_ # NULL would make purrr::map_chr() fail in archiveURLs()

print(paste0("Error Code: ", status_code, " - couldn't archive ", target_url))

}

return(wayback_url)

}

3.4 Wrapper function to archive a vector of URLs:

archiveURLs <- function(urls) {

result <- purrr::map_chr(urls, archiveURL) %>%

tibble(wayback_url = .)

return(result)

}

3.5 Proof-of-Concept - Execution

3.5.1 Scrape external Links from the project website

Demo mode: only first 5 links

# target Website

url <- "https://chemicalweapons.gppi.net"

unwanted <- c("gppi|GPPi|dadascience|youtube|Youtube")

# scrape external Links & filter a particular string from results

urls <- fetchURLs(url, ignoreStrings = unwanted) %>%

head(5) # Demo to not abuse resources

3.5.2 Archive external links and save as CSV

Each archiving step naturally takes some time. Making parallel async calls should be the next step.

archived_urls <- archiveURLs(urls)

if (!dir.exists("archived")) dir.create("archived")

write_csv(archived_urls, "archived/archived-urls.csv")

4 Questions/TBD

- How to parallelize archival? (Async calls are not supported by httr it seems, only by curl.)

- How to obey quota limitations (with ~setTimeout())?

- maybe use internetarchive to check first whether a URL has already been archived - or use generic API GET requests to retrieve URL, status and ID: https://archive.readme.io/docs/website-snapshots
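For the quota question, one low-tech option is simply to pause between requests. The sketch below uses purrr::slowly() to wrap any archiving function with a fixed delay, and it also returns the original URL next to the archived one (the second TODO in 3.3). archiveURLsSlowly(), fake_archive() and the 5-second default are assumptions for illustration; the actual rate limits of the API are not known here, and archive_fn is assumed to return a single string (or NA_character_) per URL.

```r
library(purrr)
library(tibble)

# Hypothetical wrapper: call `archive_fn` for each URL with a pause in between
archiveURLsSlowly <- function(urls, archive_fn, pause = 5) {
  # slowly() returns a version of archive_fn that waits `pause` seconds between calls
  slow_archive <- purrr::slowly(archive_fn, rate = purrr::rate_delay(pause = pause))
  tibble::tibble(
    original_url = urls,
    wayback_url = purrr::map_chr(urls, slow_archive)
  )
}

# Offline stand-in for archiveURL(), only to show the shape of the result
fake_archive <- function(target_url) {
  paste0("https://web.archive.org/web/", target_url)
}

demo_result <- archiveURLsSlowly(
  c("https://example.org", "https://example.com"),
  fake_archive,
  pause = 0.1
)
demo_result
```

In the real pipeline, archiveURL would be passed as archive_fn. This is sequential throttling only and does not answer the async question.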

That’s it for today. Hope this is useful for some! If you have a proof-of-concept for making parallel async GET/POST requests, hit me up!